In the name of the law, stop the disrespect!

The past year has affected all of us in one way or another, but have you ever thought about what effect it may have had on our language? Philipp Wicke and Marianna Bolognesi did just that in their study of thousands of tweets posted during March and April 2020.

Due to social distancing measures, people were quick to turn to social media platforms like Twitter to connect with others and express their feelings, sending around 16,000 tweets an hour with hashtags like #coronavirus, #Covid-19 and #Covid. The researchers wanted to explore this online discourse and were particularly interested in how the pandemic was discussed using the metaphor of war. Discourse about disease has often been found to use this metaphor, and cancer patients frequently complain that they are described as being in a 'battle' with the illness, which they find negative and unhelpful. With this in mind, Wicke and Bolognesi also decided to explore other figurative ways in which Covid was being described.

They collected 25,000 tweets a day that contained at least one of eight Covid-related hashtags. To gain a balanced view of language use, retweets were excluded and only one tweet per user was included. Of the collected tweets, 5.32% mentioned war. The most common words were 'fight' (29.76% of these mentions) and 'war' (10.08%), whilst 'combat', 'threat' and 'battle' were also prevalent. The researchers noted that this could reflect the early stage of the pandemic: it was a global emergency and urgent action was needed to confront the situation. Most of these examples referred specifically to the treatment of the virus and to the 'frontline' workers dealing with its effects in hospitals.

When they looked at other figurative ways in which Covid was being described, they found it referred to as a storm, a monster and a tsunami. The idea of the virus as a storm arose in 1.49% of the tweets, with words like 'thunderstorm', 'rain' and 'lightning'. A further 1.13% of the tweets referred to a tsunami, using words like 'earthquake', 'disaster' and 'tide'. References to a monster occurred in 0.68% of the tweets, with 'freak', 'demon' and 'devil' being prime examples. These negative images mainly referred to the onset and spread of the virus. It is clear, however, that the war metaphor was used far more often than any of these others.

Wicke and Bolognesi conclude that their results confirm previous findings that the war metaphor is common in public discourse about disease. However, they found that it was used particularly during the first weeks of the pandemic, to refer to the initial medical response. They also suggest that all of these metaphors are negative and unhelpful. They propose the construction of a 'Metaphor Menu', previously suggested with regard to cancer, to give the public more positive and desirable ways to talk about Covid-19 as the pandemic evolves and changes.

------------------------------------

Wicke, P. and Bolognesi, M. M. (2020) Framing COVID-19: How we conceptualize and discuss the pandemic on Twitter. PLoS ONE 15(9): e0240010.

https://doi.org/10.1371/journal.pone.0240010

This summary was written by Gemma Stoyle

To understand how a metaphor works, let’s start by considering a real-world example. For the phrase tipping point, we might imagine an object that should be kept upright, such as a vase of flowers. This object is at risk of falling once its centre of gravity shifts beyond its base. As the tipping point is not the vase’s natural state, we assume something has caused the imbalance, e.g. a curious cat or an earthquake. We also realise that there will be consequences when this tipping point is reached, and we expect these to be negative. The glass is likely to smash, destroying the vase and endangering bare feet. The contents will also spill onto the floor, spreading water and possibly causing further damage, although some of the flowers might be rescued. We recognise, then, that reaching the tipping point is very unlikely to have many positive outcomes, as it takes us from our pretty vase of flowers to an undesirable mess on the floor.

The job of a metaphor is to map the knowledge we have from these real-world examples onto something else. But whereas the source domain of a metaphor relates to concrete entities (such as the unlucky vase), the target domain will be a more abstract and complex phenomenon. Therefore, how the metaphor is used (its linguistic and discursive context) will help to shape how we conceptualise this target.

From the 1960s onwards, tipping point was regularly mapped onto moments of social change. It was used most popularly in the early 2000s, to describe the sudden spread of a new trend or idea in society. Tipping point thus became a conventional, everyday expression meaning a time when important, often uncontrollable, things happen that lead to change. It was most often applied to individuals reaching a personal tipping point and joining a wider group or process in society. Notably, the more negative interpretations were largely removed, and the metaphor was seen as more exciting than threatening. Over time, the metaphorical mapping therefore became somewhat ‘bleached’ of its source domain.

But from 2004, scientists began to use this metaphor in relation to the world’s climate, and it is now common to hear and read about ecological tipping points in the media. Van der Hel, Hellsten & Steen (2018) examined 326 articles from major world newspapers and 301 scientific articles, to see how this metaphor developed and was used between 2005 and 2014. They looked at both the discursive context of the metaphor (Who is using the phrase? What is it being used to refer to?) and the linguistic characteristics of its use (How is the phrase combined with different parts of speech and punctuation? Is the use of the phrase deliberately metaphorical?). In this way, they traced the changing use and meanings of tipping point in both the media and science.

The metaphor was first used by scientists in an attempt to explain their complex research into abrupt changes in the climate system to the media. At this time, the phrase was used alongside related ideas of falling, danger and irreversibility, which drew attention to its metaphorical meaning rather than the conventional one. By referring explicitly to the source domain in this way, scientists made the metaphor more ‘deliberate’, actively encouraging us to reflect on the concrete meaning of the expression. The metaphor therefore highlighted the serious and threatening nature of a tipping point and, by extension, the catastrophic issue of climate change.

Until this time, tipping point in climate change news articles had mainly been used in the conventional sense, to refer to changes in individual social attitudes towards the environment. But from 2005 to 2007, the phrase began to appear more frequently in inverted commas, marking its increasing, and unfamiliar, use by scientists. The phrase also began to be used in reference to humanity as a whole, in contrast to the previous convention of referring to an individual in society. This use of punctuation and these new collocations again serve to focus attention on the metaphorical status of the phrase. In doing so, they may encourage a reader to draw more deeply on their source domain knowledge and reconsider the metaphor’s meaning.

Within the scientific community, use of the metaphor continued to increase from 2008. But whereas it may have begun as a rhetorical device, it subsequently became a mainstream scientific concept and a theoretical tool. The imagery of a tipping point largely replaced some earlier metaphors (e.g. thresholds). Studies explored what the causes and outcomes of different potential tipping points might mean in various climate contexts. This suggests an inspirational role for the metaphor: it captured the imagination of scientists and opened new directions for study.

In contrast, the media used the metaphor less after 2007, but it re-emerged from 2011 in news reports on political speeches at international climate conferences. In these reports, it was increasingly tied to specific locations (e.g. the Amazon) and events, and was often still written in inverted commas. However, the phrase also began to be used for other, non-climate-related changes, such as sudden policy shifts. This suggests that the tipping point metaphor in the media had become more flexible, again incorporating the conventional sense of a drastic change.

This study shows that in science and the media, a metaphor can help explain complex ideas and encourage new ways of thinking about a phenomenon. It also demonstrates the versatility of metaphors, tracing how one that had become an everyday expression was mapped onto a new target domain, leading to a restructured understanding. By examining the linguistic and discursive contexts of tipping point, Van der Hel, Hellsten & Steen (2018) highlight the numerous and changing roles that metaphors can play. They also show how metaphors can help scientists and journalists in public debates on important topics.

------------------------------------------------------------

van der Hel, S., Hellsten, I. and Steen, G., 2018. Tipping points and climate change: Metaphor between science and the media. Environmental Communication, 12(5), pp.605-620.

This summary was written by Sarah Kirk-Browne

When we talk to each other, we don’t just rely on words. Emotion is embodied: our facial expressions, body language and tone of voice all convey our feelings and affect how our words are interpreted. But in online written communication, we can’t rely on these cues. As discussed in the previous post, punctuation can help represent tone of voice, but often something is still missing. In the fifth chapter of her pop linguistics book Because Internet, Gretchen McCulloch explores how emoji became popular as a way of replicating gestures in online communication.

Emoji cannot be considered a language. There is a limit to what they can express, and most languages can handle meta-level vocabulary about language, which emoji cannot. But emoji clearly do something. Many popular emoji depict hand and facial gestures, and McCulloch says this inspired her to begin treating emoji as gesture.

There are two types of gesture that emoji can represent. The first are called emblems. These are nameable gestures with precise forms and stable meanings, and they are often culturally specific. Examples include winking, giving a thumbs up, and obscene hand gestures. Many of these have directly equivalent emoji, for example fingers crossed 🤞, rolling eyes 🙄, or a peace sign ✌️. Some emoji are more metaphorical, such as the eggplant emoji as a phallic symbol, but with knowledge of internet norms, they still have fixed meanings. Emoji are not the only way to express emblems online. Reaction gifs and images are also used to express specific moods or actions, and we can refer to many of them by name (for example, most internet-literate people will know what I mean by Michael Jackson Eating Popcorn.gif).

The second type of gesture with corresponding emoji consists of illustrative, or co-speech, gestures. These gestures depend on the surrounding speech, and highlight or reinforce the topic. You often make them without realising, even at times when they make little sense, such as waving your hands around on the phone when your conversational partner can’t see you. They don’t have specific names but can be described: think of the way you move your hands when giving somebody directions or describing the size of something. Such gestures are also represented in emoji. The example McCulloch uses is the range of emoji possible in a ‘Happy Birthday’ message, perhaps some combination of 🎂🍰🎁🎊🎉🎈🥳. In these contexts, the order doesn’t matter: these emoji aren’t telling a story but adding to the current one. Illustrative emoji are also more likely to be taken at face value. They don’t require the kind of internet-culture knowledge that the eggplant emoji might, for example. If emblems are for the benefit of the listener, then illustrative gestures are for the benefit of the speaker, helping them get their message across.

McCulloch also examines common sequences of emoji. She finds that, unlike words, emoji are often repeated. Sometimes this is a straightforward sequence of the same emoji (the most common being 😂). Sometimes it is a sequence of different but thematically linked emoji, such as the series of birthday-related emoji above, or a series of love emoji such as 💕💓😍💗🥰💖. This is another reason why emoji can be considered gesture: repetition does not generally occur in our words, but does occur in hand gestures.

Repetitive gestures are known as beat gestures. They are rhythmic, and if you stutter while you speak, your gestures stutter too. Emoji do this as well: we type 👍👍👍 to represent what in real life would be a sustained or repeated thumbs up. We can even repeat emoji that have no literal gesture attached, because repetition is available to emoji as a whole. The ‘clap back’ is a common beat gesture among African American women, and it is often represented through emoji as a form of emphasis:

👏 WHAT 👏 ARE 👏 YOU 👏 DOING 👏

Emoji serve an important purpose in informal written communication, filling in for expressions and gestures that are otherwise hard to convey. For more from McCulloch on emoji and gesture, listen to Episode 34 of Lingthusiasm, her podcast with Lauren Gawne. It discusses the content of this chapter and provides several further links on the topic.

McCulloch, Gretchen. 2019. Because Internet: Understanding the New Rules of Language. New York: Riverhead Books.

This summary was written by Rhona Graham

As a result of technology, many of our casual, everyday conversations now take place online, in written form. This has in turn changed how we write informally, which is the topic of Gretchen McCulloch’s 2019 book Because Internet. Written for a general audience, the book focuses on how the internet is changing language. Chapter 4 discusses how we convey our emotions through written language, and the history of these conventions. Conversational writing has led us to find innovative ways of replicating our speech in writing. This covers both our words and our tone, which McCulloch calls typographical tone of voice.

Why is it so much scarier to receive a text saying “ok.” than “ok”? In online messaging, we tend to use line or message breaks, rather than full stops, to mark the end of an utterance. Full stops are associated with falling intonation, in the same way that a question mark indicates rising intonation. Such intonation doesn’t often occur in actual speech, and many of our messages are designed to replicate speech. In some contexts (like “ok.”), this implied falling intonation can be interpreted as passive-aggressive or angry. The media has noticed this effect since 2013.

Some conventions, particularly those for strong emotion, have been around a lot longer than the internet. For example, YOU ARE PROBABLY SHOUTING THIS SENTENCE IN YOUR HEAD, because capital letters have been a way of expressing strong emotion for at least a century. Another convention is repeating letters, particularly in emotive words, such as “yayyy” or “nooo”. This also predates the internet. The earliest example dates from 1848, and the practice gained popularity throughout the 20th century in sounds such as “ahhh” or “hmmm”. In a 2011 study of Twitter, sentiment words were the words most often lengthened in this way, for example “ugh”, “lmao”, “damn” and “nice”.

McCulloch also discusses the ways in which we soften a message to come across as friendly or approachable. The exclamation point has progressed from signifying ‘excitement’ to being associated with ‘warmth’ and ‘sincerity’. This is why most younger people would rather receive a text saying “ok!” than “ok”. ‘lol’ no longer means that you are actually laughing out loud; instead, it has taken on the function of polite laughter. Smiley faces (i.e., emoticons and emoji) have a similar effect, tempering the tone of a message and making it appear friendlier.

One area of interest is how we indicate that we are being sarcastic without outright saying #sarcasm (which, after all, would defeat the point of being sarcastic). In speech, sarcasm is conveyed through tone of voice and facial expressions. In written text, we need a way to signify these additional meanings without explicitly stating that we’re making a joke. Many dedicated sarcasm marks have been formally proposed, but these don’t tend to stick. One that has stuck is the sarcasm tilde (~). McCulloch argues that it derives from the ‘sparkle punctuation’ of the mid-2000s MySpace days, when users added punctuation marks for aesthetic purposes. Now, using ~ in a message indicates that it isn’t serious, and we can then interpret it, based on context, as irony or sarcasm. McCulloch calls this ‘sparkle sarcasm’. The sarcasm tilde can also be seen as a literal representation of the way your tone rises and falls when you are being sarcastic.

[Image: A Tumblr post from 2016]

Ironic emphasis is also an interesting area to examine. The Tumblr post above, a screenshot from 2016, shows just some of the ways in which emphasis was conveyed. The author uses unexpected capital letters, spaced-out letters, hashtags and TM to add emphasis.

However, it is interesting to note that the rest of this sentence lacks conventional punctuation and capitalisation. There is no full stop, ‘tumblr’ is uncapitalised, and so is the ‘i’ at the beginning of the sentence. McCulloch calls this ‘minimalist typography’ and discusses how it is used to convey tone of voice, particularly in the current era of smartphones. With predictive text, writing a sentence without capitalising the first word, or even typing a lowercase ‘i’, requires extra effort. Even while writing this post, my word processor automatically capitalised a lone i, and I had to go back and retype it. This extra effort conveys meaning to the reader through absence. Capital letters in an otherwise uncapitalised sentence indicate that the author has used these typographic forms for some specific reason. McCulloch describes minimalist punctuation as “an open canvas, inviting you to fill in the gaps”.

These are just some of the ways in which we reflect our tone in typed speech, from caps lock to passive-aggressive punctuation. Given the developments of the last twenty years alone, it is highly likely that these conventions will continue to change. Generations to come will develop their own conventions for irony, passive aggression and humour. Because Internet is a fascinating snapshot of how language is currently being used online, and I recommend it as an enjoyable and interesting read.

McCulloch, Gretchen. 2019. Because Internet: Understanding the New Rules of Language. New York: Riverhead Books.

This summary was written by Rhona Graham

(a) wen mi ier di bap bap, mi drap a groun an den

when I heard the bap bap [the shots], I fell to the ground and then

mi staat ron.

I started to run.

(b) When I heard the shot (bap, bap), I drop the gun, and then I run.

|

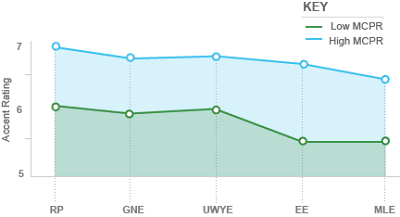

| Is accent bias decreasing or is this just 'age-grading'? |

|

| Degree of expertise and accent rating |

|

| More prejudiced listeners were more likely to downrate all of the accents |